Accurate localization underpins modern mobility, powering everything from precise rideshare pickups and efficient deliveries to augmented reality and autonomous systems. Yet achieving reliable sub-meter precision with commodity hardware remains one of the field’s central challenges.

A range of technologies is being explored to improve positioning, such as real-time kinematic (RTK) and Precise Point Positioning (PPP) corrections, 5G methods standardized under the 3rd Generation Partnership Project (3GPP), simultaneous localization and mapping (SLAM), light detection and ranging (lidar), inertial measurement units (IMUs), and ultra-wideband (UWB). Each plays a role in specific contexts, but for everyday, mass-market deployment, two paradigms dominate the conversation: visual positioning systems (VPS), which rely on cameras and computer vision to match images against reference databases, and global navigation satellite system (GNSS) plus IMU sensor fusion (GNSS+IMU), which integrates satellite positioning with inertial data already present in billions of devices.

These two approaches are not mutually exclusive. VPS works best in dense urban areas where GNSS can struggle, while GNSS+IMU excels in the open environments where VPS has fewer features to recognize. In practice, VPS even depends on GNSS to help narrow the search space in its visual database. That makes the two technologies natural complements, and together they provide the building blocks for the next generation of spatial intelligence.

The Role of VPS

VPS uses computer vision to determine position relative to known landmarks. In favorable environments, especially dense, feature-rich urban settings, it can deliver impressive accuracy. VPS has been successfully applied in AR anchoring, pedestrian navigation, and even some indoor mapping, offering a level of precision that is difficult to match with GNSS alone.

At the same time, VPS faces challenges that limit its ability to scale as a standalone universal solution. Maintaining vast libraries of reference imagery requires constant collection and refreshing, even for companies with resources such as Google’s Street View. Keeping cameras active and running neural network matching consumes power and compute, with AR and navigation apps often showing rapid battery drain when vision pipelines are engaged.

Performance can also be fragile, with accuracy dropping in low light, bad weather, or environments with limited features such as open fields or glass-heavy corridors where reflections distort recognition. Because VPS requires continuous camera use, it also raises privacy concerns under regulations like GDPR.

But VPS still fills an important role: it works best in exactly the environments where GNSS struggles most. In dense urban areas with abundant visual features but heavy multipath interference, VPS provides a complementary capability that enhances overall localization performance when paired with GNSS+IMU.

GNSS+IMU Fusion

GNSS provides global reach, but smartphone accuracy typically ranges from 3 m to 5 m. This may be adequate for turn-by-turn navigation, but it does not meet the precision required for lane-level guidance, pedestrian navigation, or locating building entrances. Pairing GNSS with IMU data changes that equation by adding orientation and motion context.

Sensor fusion combines GNSS position (x, y, z) with IMU-derived orientation (α, β, γ) to deliver six degrees of freedom (6DoF). In practice, this allows devices to determine not only where they are, but also which way they are facing, which is critical for navigation and AR anchoring.
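To make the 6DoF idea concrete, here is a minimal Python sketch of a pose that pairs a position with an orientation. It is not the fusion algorithm itself, only the data it produces. The names `Pose6DoF`, `make_pose`, and `facing_vector` are hypothetical, and two things are assumed for illustration: positions are expressed in a local east-north-up frame in meters, and α, β, γ are read as heading (clockwise from north), pitch, and roll in degrees.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose6DoF:
    # Position in an assumed local east-north-up frame, in meters.
    x: float
    y: float
    z: float
    # Orientation in degrees. Reading alpha/beta/gamma as heading/pitch/roll
    # is an assumption made for this sketch.
    alpha: float
    beta: float
    gamma: float


def make_pose(position, orientation):
    """Pair a GNSS-derived position with an IMU-derived orientation."""
    x, y, z = position
    alpha, beta, gamma = orientation
    # Normalize heading into [0, 360) so later comparisons are consistent.
    return Pose6DoF(x, y, z, alpha % 360.0, beta, gamma)


def facing_vector(pose):
    """Unit vector (east, north, up) along which the device is facing."""
    heading = math.radians(pose.alpha)
    pitch = math.radians(pose.beta)
    return (
        math.sin(heading) * math.cos(pitch),
        math.cos(heading) * math.cos(pitch),
        math.sin(pitch),
    )


pose = make_pose((10.0, 20.0, 1.5), (90.0, 0.0, 0.0))
east, north, up = facing_vector(pose)  # level device facing roughly due east
```

The position answers "where", and the facing vector answers "which way", which is the combination that navigation and AR anchoring rely on.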

Another key advantage is that fusion runs efficiently on-device, using low-power sensors already embedded in nearly every phone. It avoids the battery drain and compute overhead of vision-based methods, remains resilient in poor visibility, and largely sidesteps the privacy concerns associated with continuous camera use.

Together, GNSS+IMU and VPS offer complementary strengths: GNSS+IMU provides scalable global coverage, while VPS adds value in dense urban or visually rich environments. Used in tandem, they extend reliable sub-meter localization across a far wider range of real-world scenarios.

Performance in Field Tests

Independent field testing has underscored the impact of GNSS+IMU fusion in real-world conditions. In trials conducted in Louisville, Colorado, standard smartphones relying solely on GNSS averaged ~1.9 meters of error. When collaborative corrections and IMU fusion were added, mean error dropped to ~0.55 meters – a more than threefold improvement.

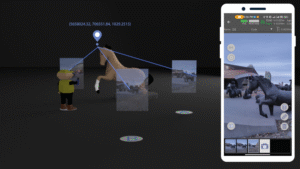

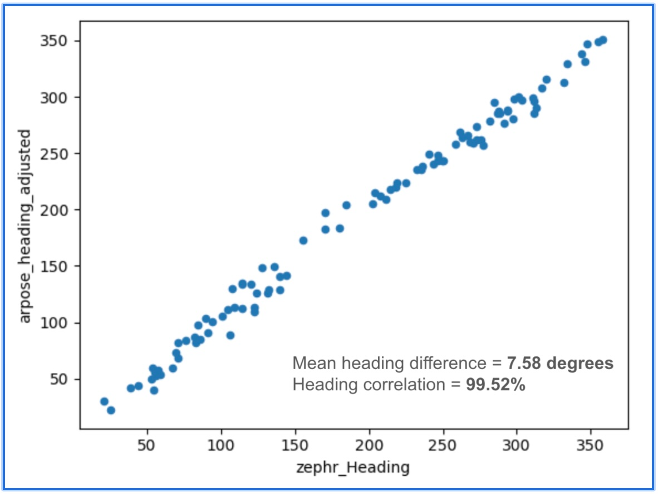

To benchmark localization performance against visual methods, we compared heading determination from Zephr’s sensor-based approach with Google’s VPS, widely considered an industry leader in vision-based localization. Using the same device and location, headings generated from ArPose and Zephr were plotted against VPS outputs.

The results in Figure 1 show a strong correlation, with a mean heading difference of just 7.58 degrees and a heading correlation of 99.52%. This provides a useful benchmark, illustrating that sensor-based approaches can achieve heading accuracy on par with vision-based systems while avoiding the data, compute, and privacy burdens tied to continuous camera use.
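Comparing headings needs some care because angles wrap at 360 degrees: 350 and 10 are 20 degrees apart, not 340. The sketch below shows one common way to compute a mean absolute heading difference between two series. The article does not state how its figures were computed, so this is an illustration rather than the method used in the test, and the function names and sample values are made up.

```python
def heading_diff(a_deg, b_deg):
    """Signed difference a - b in degrees, wrapped into [-180, 180)."""
    return (a_deg - b_deg + 180.0) % 360.0 - 180.0


def mean_abs_heading_diff(series_a, series_b):
    """Mean absolute wrapped difference between two equal-length series."""
    if len(series_a) != len(series_b) or not series_a:
        raise ValueError("series must be non-empty and of equal length")
    total = sum(abs(heading_diff(a, b)) for a, b in zip(series_a, series_b))
    return total / len(series_a)


# Illustrative values only, not measurements from the field test.
sensor_headings = [350.0, 90.0]
vision_headings = [10.0, 100.0]
print(mean_abs_heading_diff(sensor_headings, vision_headings))  # 15.0
```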

Head-to-Head Comparison

When considered side by side, VPS and GNSS+IMU reveal distinct strengths. VPS delivers high accuracy in dense urban environments, where GNSS can be degraded by multipath or blockage. GNSS+IMU, meanwhile, provides consistent global coverage and efficient performance in open environments where VPS has fewer features to recognize. Taken together, they form a complementary toolset, with each addressing the other’s gaps.

- Cost & Infrastructure: VPS offers detailed visual positioning but requires continuous investment in capturing and updating reference imagery, which can run into petabytes of data and demand large-scale cloud storage. GNSS+IMU leverages existing satellite constellations and commodity sensors already embedded in smartphones, scaling naturally without additional infrastructure.

- Battery & Compute: VPS enables precise landmark recognition but must keep cameras active and process high-resolution frames, a pipeline that consumes energy and compute. GNSS+IMU fuses lightweight sensor readings on-device, sustaining real-time performance with minimal power. Hybrid systems can use VPS selectively for visual anchors when power budgets allow.

- Environmental Robustness: VPS excels in dense urban cores where landmarks are abundant, but its performance can degrade in low light, heavy weather, or feature-poor settings such as highways or open fields. GNSS+IMU continues to perform in most outdoor environments, with IMUs bridging short GNSS gaps in tunnels or urban canyons. Together, they extend reliable coverage across diverse conditions.

- Privacy: VPS provides visual context but depends on continuous camera feeds, which can raise concerns under regulations like GDPR and CCPA. GNSS+IMU relies solely on inertial and satellite data, which can be anonymized and processed on-device. Privacy-conscious applications may favor GNSS+IMU as the default, while invoking VPS in controlled contexts.

- Scalability: VPS delivers strong results in mapped geographies but is constrained by the cost of collecting and maintaining visual data globally. GNSS+IMU scales as more devices ship with standard GNSS receivers and inertial sensors, with accuracy improving further when devices contribute corrections to a shared network. In combination, VPS can add value in high-density urban corridors where visual richness offsets its infrastructure demands.
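The Environmental Robustness point above mentions IMUs bridging short GNSS gaps. A heavily simplified way to picture this is dead reckoning: starting from the last good fix, the position is advanced using heading and speed until GNSS returns. The sketch below assumes a flat local east-north frame and that a speed estimate is available for each interval. Real systems integrate raw accelerometer and gyroscope data and accumulate drift, which is why this only works for short gaps. The function names are hypothetical.

```python
import math


def dead_reckon(east_m, north_m, heading_deg, speed_mps, dt_s):
    """Advance a position by one interval along the given heading."""
    heading = math.radians(heading_deg)
    distance = speed_mps * dt_s
    # Heading is measured clockwise from north, so east uses sin, north uses cos.
    return (
        east_m + distance * math.sin(heading),
        north_m + distance * math.cos(heading),
    )


def bridge_gap(last_fix, samples):
    """Propagate the last GNSS fix through (heading_deg, speed_mps, dt_s) samples."""
    east, north = last_fix
    for heading_deg, speed_mps, dt_s in samples:
        east, north = dead_reckon(east, north, heading_deg, speed_mps, dt_s)
    return east, north


# Five one-second samples heading due east at 2 m/s: about 10 m of eastward travel.
estimate = bridge_gap((0.0, 0.0), [(90.0, 2.0, 1.0)] * 5)
```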

Beyond Accuracy: Spatial Intelligence Without Cameras

GNSS+IMU fusion not only narrows positioning error but also provides contextual awareness. By combining positional vectors with device orientation, systems can determine not just where a device is, but what lies within its field of view.

This contextual layer enables landmark-aware navigation and natural AI interactions. Instead of vague coordinates, users could be guided to “meet at the blue mailbox next to the coffee shop entrance.” In AR, digital content can be anchored to the physical world without the overhead of vision-based methods. And for AI interfaces, assistants could answer spatial queries (“Is the restaurant to my right or left?”) with precision that feels intuitive.
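A spatial query such as "to my right or left?" can be sketched from position and heading alone. The example below computes the bearing from the device to a landmark, subtracts the device heading, and checks the result against a field of view. It assumes a flat local east-north frame in meters and a known landmark position. The function names and the 120-degree default field of view are illustrative choices, not values from the article.

```python
import math


def relative_bearing(device_en, heading_deg, landmark_en):
    """Angle to the landmark relative to the device heading, in [-180, 180).

    Positive means the landmark is to the right, negative to the left.
    """
    d_east = landmark_en[0] - device_en[0]
    d_north = landmark_en[1] - device_en[1]
    bearing = math.degrees(math.atan2(d_east, d_north))  # clockwise from north
    return (bearing - heading_deg + 180.0) % 360.0 - 180.0


def in_field_of_view(rel_bearing_deg, fov_deg=120.0):
    """True if the landmark falls within the horizontal field of view."""
    return abs(rel_bearing_deg) <= fov_deg / 2.0


def side_of(rel_bearing_deg):
    """Coarse answer to 'is it to my right or left?'."""
    return "right" if rel_bearing_deg > 0 else "left"


# Device at the origin facing north; landmark 10 m east and 10 m north.
rel = relative_bearing((0.0, 0.0), 0.0, (10.0, 10.0))
print(side_of(rel), in_field_of_view(rel))  # right True
```

Because the answer depends directly on heading, its quality is bounded by heading accuracy, which is why the heading comparison in the field tests matters for this kind of interaction.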

While GNSS+IMU avoids reliance on cameras, VPS can still add complementary value by providing visual anchors in feature-rich spaces. Used together, the two methods create a more resilient and adaptive localization system, able to support a wider range of real-world scenarios than either could alone.

A Clearer Path Forward

VPS has proven valuable in research, robotics, and AR demonstrations, particularly in dense urban environments. But its reliance on imagery, heavy compute, and continuous camera use makes it difficult to scale as a universal solution for sub-meter accuracy.

To unlock the next generation of spatially intelligent applications, from context-aware assistants to immersive AR, localization must be both practical and massively scalable. This foundation will come from GNSS+IMU sensor fusion, complemented by vision-based methods where they add value. GNSS+IMU builds on infrastructure and sensors already present in billions of devices, delivers efficient on-device performance, and avoids the privacy tradeoffs of camera-based systems.

As positioning becomes the backbone of spatial AI, the evidence points to a clear outcome: the future will be multimodal, but its scalable foundation will be GNSS+IMU fusion, which lets devices understand and interact with the world reliably, with or without cameras.